Matrix: Catalyst for the AI Big Bang

The Era of AI

The concept of artificial intelligence was first proposed at a workshop at Dartmouth College in 1956. In the decades since, scientists and engineers have put in extensive research and development work, and numerous ideas and theories have emerged.

Today, AI has found its way into every aspect of our lives. The popularity of self-driving cars has changed the transportation and logistics sectors. AI is helping doctors make more accurate diagnoses. AI-powered search engines now help us acquire knowledge and information. We can also train AI to read text and take over customer service jobs. AI is also seeing ever-growing use in the financial sector, especially in long-term investing, short-term underwriting, and credit services.

As time goes on, AI will only develop at a faster pace, as we saw when AlphaGo beat Lee Sedol after merely one year of training. In a recent computer vision experiment, AI algorithms were able to recognize over one thousand types of photos. In photo recognition tests, AI algorithms reached 98% accuracy, compared with 95% for humans. This result is six times better than that of five years ago. Applying the same technology to clinical diagnosis, AI has outperformed the best human doctors in diagnosing lung and thyroid cancers, and it is already widely used in hospitals as an assistive tool.

Blockchain-Empowered AI—AI That Cooperates with Humans

Will the future be like one of those science-fiction films where AI takes over the world and destroys mankind? This sets us pondering and keeps scientists searching for a solution. Tom Gruber, developer and co-founder of Siri, said, “What is the purpose of AI? To make our devices smarter so they can do jobs no one wants to do. Today, AI has beaten man in chess and weiqi. Maybe in the future, some super-AI will rule over mankind? No, AI shouldn’t compete with man, but we should cooperate. With the help of artificial intelligence, we should all have superhuman powers. For example, maybe people with cerebral palsy will be able to communicate with Siri, and Siri will also help us with a range of things from navigation to answering complicated questions.”

But how do we make sure AI cooperates with humans rather than competing with them? Matrix AI Network has the answer. In its 1.0 version, Matrix uses AI to empower its blockchain, drastically improving its performance, security and social benefit. Matrix 2.0 goes a step further: it is an AI ecosystem managed by a high-performance blockchain.

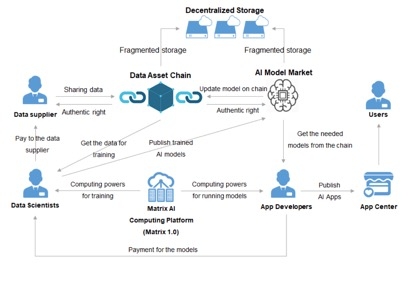

Picture: Blockchain-based AI Ecosystem as in Matrix 2.0

In this ecosystem, AI is managed and used on a decentralized blockchain network, and the operation and decision-making of individual AI nodes go through the consensus mechanism of the entire network. This structure guards against the possibility of some awakened AI taking over the world. More importantly, AI nodes in this decentralized network can cooperate with each other to better serve us humans.

In the Era of AI, Everyone Will Own a Personal AI

To pave the way for the explosive growth of AI, we must lower its entry barrier first. This will bring about more diversity, and like in nature, diversity is always key to a vibrant ecosystem.

For this very purpose, Matrix is launching MANTA, a platform to drastically lower the entry barrier to AI. With its help, everyone can have his/her personal AI in the future. Corporate AI service providers are not the best choice for individualized AI services for two reasons:

1. Companies need to control production costs.

2. People will be reluctant to give companies access to their personal data.

So the ideal situation is where people train their own AI algorithms to serve their personal needs, and MANTA will help people turn this into a reality.

The Mechanism of MANTA

MANTA (MATRIX AI Network Training Assistant) is a distributed automated machine learning (AutoML) platform built on Matrix’s highly efficient blockchain. It is an ecosystem of AutoML applications based on distributed acceleration technology. This technology was originally used for image categorization and 3D depth estimation tasks in computer vision. It searches for a high-accuracy, low-latency deep model using AutoML network search algorithms and accelerates the search through distributed computing. MANTA will have two major functions: auto-machine learning and distributed machine learning.

Auto-Machine Learning—Network Structure Search

Matrix’s auto-machine learning adopts a once-for-all (OFA) network search algorithm. Essentially, it builds a supernet with a very large network structure search space, from which a large number of sub-networks can be generated, and then uses a progressive prune-and-search strategy to adjust the supernet parameters. This way, sub-networks of various scales with reliable accuracy can be sampled from the supernet, and the sampled sub-networks do not need to be fine-tuned, which reduces deployment costs.
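To make the idea of sampling sub-networks from a supernet more concrete, here is a minimal sketch. The search space (depth, kernel size and expansion ratio per module), the value lists and the function names are illustrative assumptions, not MANTA's actual configuration; the sketch only shows how a modest set of per-module choices produces a very large number of candidate sub-networks.

```python
import random

# Hypothetical search space: each module independently picks a depth,
# a kernel size and a channel-expansion ratio (values are illustrative).
SEARCH_SPACE = {
    "depth": [2, 3, 4],
    "kernel_size": [3, 5, 7],
    "expand_ratio": [3, 4, 6],
}
NUM_MODULES = 5  # assumed number of searchable modules


def sample_subnet(rng):
    """Draw one sub-network configuration from the search space."""
    return [
        {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        for _ in range(NUM_MODULES)
    ]


def count_subnets():
    """Number of distinct configurations this toy search space can express."""
    per_module = 1
    for options in SEARCH_SPACE.values():
        per_module *= len(options)
    return per_module ** NUM_MODULES


rng = random.Random(0)  # fixed seed so the sketch is repeatable
subnet = sample_subnet(rng)
print(count_subnets())
for module in subnet:
    print(module)
```

Even this toy space with three options per setting yields more than fourteen million configurations, which is why training one shared supernet and sampling from it is attractive compared with training every candidate separately.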

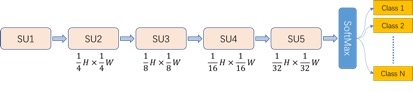

Take image categorization as an example. The accompanying illustration shows the network structure MANTA adopts. This structure consists of five modules. The stride of the first convolutional layer in each module is set to 2, and the stride of all other layers is set to 1. This means each module (SU) reduces the spatial dimensions while sampling multi-channel features at the same time. Finally, a softmax regression layer calculates the probability of each object type and categorizes the images.

Picture: Network Structure Module Using Image Categorization as an Example
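The effect of the stride settings described above can be checked with a little arithmetic. The following sketch assumes a 224-pixel input, 3x3 kernels with padding 1, and three layers per module; none of these values come from MANTA itself. It tracks the feature-map size through five modules and then applies a softmax to some made-up class scores.

```python
import math


def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Spatial size after one convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def module_output_size(size, num_layers=3):
    """First layer of a module uses stride 2; the remaining layers use stride 1."""
    size = conv_output_size(size, stride=2)
    for _ in range(num_layers - 1):
        size = conv_output_size(size, stride=1)
    return size


def softmax(logits):
    """Numerically stable softmax: turn class scores into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


size = 224  # assumed input resolution
sizes = [size]
for _ in range(5):  # five modules, as in the description above
    size = module_output_size(size)
    sizes.append(size)
print(sizes)

probs = softmax([2.0, 1.0, 0.1])  # made-up scores for three classes
predicted_class = probs.index(max(probs))
print(predicted_class)
```

Under these assumptions each module halves the resolution, so five modules take a 224-pixel input down to a 7-pixel feature map before classification.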

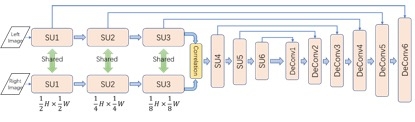

Meanwhile, the accompanying picture illustrates the network structure MANTA adopts for depth estimation. There are six searchable modules in this structure. The first three SUs mainly carry out dimensional reduction and extract multi-scale feature maps from the left and right views. A correlation layer in the middle calculates the disparity (parallax) between the left and right feature maps. The last three SUs further extract features from the disparity. Subsequently, the network upsamples the dimension-reduced feature maps through six transposed convolutional layers and links them to the feature maps produced by the six preceding SUs through U-shaped connections to estimate depth maps at different scales.

Picture: Network Structure Module of Depth Estimation
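As a rough illustration of what a correlation layer does with left and right feature maps, the sketch below works on a single image row. It assumes the common stereo convention that a point at position x in the left view appears at position x - d in the right view, where d is the disparity (parallax). The function names and toy data are hypothetical, and a real layer would operate on full 2D feature maps on a GPU.

```python
def correlation_row(left, right, max_disp):
    """Correlation scores for one image row.

    left, right: lists of per-pixel feature vectors from the two views.
    Returns cost[x][d] = dot(left[x], right[x - d]), or 0.0 when x - d
    falls outside the row.
    """
    costs = []
    for x, f_left in enumerate(left):
        row = []
        for d in range(max_disp + 1):
            if x - d < 0:
                row.append(0.0)
            else:
                f_right = right[x - d]
                row.append(sum(a * b for a, b in zip(f_left, f_right)))
        costs.append(row)
    return costs


def best_disparity(costs):
    """Pick, per pixel, the disparity with the highest correlation."""
    return [row.index(max(row)) for row in costs]


# Toy data: six pixels with one-hot features; the left view is the right
# view shifted by two pixels, padded with empty features on the left edge.
width, shift = 6, 2
right = [[1.0 if i == j else 0.0 for j in range(width)] for i in range(width)]
left = [[0.0] * width for _ in range(shift)] + right[:-shift]

disparities = best_disparity(correlation_row(left, right, max_disp=3))
print(disparities)
```

Pixels whose features match at a shift of two report a disparity of 2, while the two padded pixels have no match and fall back to 0.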

Distributed Machine Learning—Distributed Data/Model Parallel Algorithm

MANTA will use distributed parallel computing on GPUs to further accelerate network search and training. Distributed machine learning on MANTA is essentially a set of distributed parallel algorithms that support both data parallelism and model parallelism. The data parallel algorithm distributes each batch of data in every iteration across different GPUs for forward and backward computation, and every GPU uses the same sub-model in a given iteration. Model parallelism differs in that, in every iteration, MANTA allows different nodes to sample different sub-networks. After each node completes its own gradient aggregation, the nodes share their gradient information with each other.

Distributed Data Parallel Algorithm Workflow

1. Sample four sub-networks from the supernet.

2. For every sub-network, each GPU on each node carries out the following steps:

(1) Fetch training data according to the configured batch size;

(2) Propagate forward and backward through the current sub-network;

(3) Accumulate the gradient information obtained from back-propagation.

3. Aggregate and average the gradient information accumulated by all GPUs and broadcast the result (an all-reduce operation).

4. Every GPU uses the global gradient information it receives to update its supernet.

5. Return to step 1.
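The gradient averaging at the heart of this workflow can be simulated without any GPUs. The sketch below is a simplified illustration, not MANTA's implementation: it replaces the supernet with a single scalar weight for the model y = w * x, skips sub-network sampling, and uses a plain Python function as a stand-in for all-reduce. All names and numbers are assumptions.

```python
def local_gradient(w, batch):
    """Gradient of mean squared error for y = w * x on one worker's shard."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values):
    """Stand-in for all-reduce: every worker ends up with the global mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def data_parallel_step(weights, shards, lr):
    """One iteration: local backward pass, all-reduce, identical update."""
    grads = [local_gradient(w, shard) for w, shard in zip(weights, shards)]
    synced = all_reduce_mean(grads)
    return [w - lr * g for w, g in zip(weights, synced)]


# Four simulated GPUs, each holding a replica of the weight and its own
# shard of data generated from y = 3x.
shards = [[(x, 3.0 * x) for x in (i + 1.0, i + 2.0)] for i in range(4)]
weights = [0.0] * 4
for _ in range(200):
    weights = data_parallel_step(weights, shards, lr=0.01)
print(weights)
```

Because every replica applies the same averaged gradient, the replicas stay identical after each step, which is the property that lets the workers share one consistent set of weights.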

Distributed Model Parallel Algorithm Workflow (Taking Four Nodes for Example)

1. GPUs located on the same node sample the same sub-network model, giving four different sub-network models in total.

2. All GPUs located on the same node carry out the following steps:

(1) Fetch training data according to the configured batch size;

(2) Propagate forward and backward through the sub-network sampled by the node;

(3) Calculate the gradient information obtained from back-propagation;

(4) Aggregate and average the gradient information across the GPUs on the same node and store the result in the node’s CPU memory.

3. The four nodes aggregate and average the gradient information obtained in step 2 and broadcast the result to all GPUs.

4. Every GPU uses the global gradient information it receives to update its supernet.

5. Return to step 1.
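The two-level aggregation in steps 2(4) and 3 can be sketched as follows. This is a simplified illustration under stated assumptions: gradients are single numbers rather than tensors, each node has two GPUs, and the values are made up. In a real supernet, different sub-networks would contribute gradients to different subsets of the shared parameters.

```python
def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def hierarchical_average(node_grads):
    """Two-level reduction over node_grads[n][g] (GPU g on node n).

    First average within each node (step 2(4)), then average the per-node
    results across nodes (step 3).
    """
    per_node = [mean(gpu_grads) for gpu_grads in node_grads]
    return mean(per_node), per_node


# Four nodes with two GPUs each; the numbers are made up for illustration.
node_grads = [
    [0.25, 0.75],
    [1.0, 2.0],
    [-0.5, 0.5],
    [4.0, 2.0],
]
global_grad, per_node = hierarchical_average(node_grads)
flat_grad = mean([g for gpu_grads in node_grads for g in gpu_grads])
print(per_node)
print(global_grad, flat_grad)
```

When every node has the same number of GPUs, the two-level average equals the flat average over all GPUs, so the hierarchy changes the communication pattern without changing the result.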

Picture: MANTA Task Training Monitor Interface

New Public Chain of the AI Era

First, MANTA will take Matrix one step further towards a mature decentralized AI ecosystem. With the help of MANTA, training AI algorithms and building deep neural networks will be much easier. This will attract more algorithm scientists to join Matrix and diversify the AI algorithms and services available on the platform. Competition among a large number of AI services will motivate them to improve so that users will have more quality services to choose from. All of this will lay a solid foundation for the soon-to-arrive MANAS platform.

Secondly, MANTA will lower the entry barrier to AI algorithms. This will no doubt attract more developers and corporate clients to the Matrix ecosystem, which will further solidify its value and boost the liquidity of MAN.

Finally, as more people use MANTA, there will be greater demand for computing power in the Matrix ecosystem. For miners, this will bring them lucrative rewards and in turn attract more people to become computing power providers for Matrix, further expanding the scale of its decentralized computing power distribution platform. All this will help Matrix optimize marginal costs and provide quality computing power at a better price for future clients, and this forms a virtuous cycle.

MANTA is a vital piece in Matrix’s blueprint. It will fuel Matrix’s long-term growth and set the standard for other public chain projects.

Picture: The AI Industry in Rapid Growth

Market Value of MANTA

Whether for the burgeoning AI industry or for blockchain, MANTA is an epoch-making product with exceptional creativity and social benefit.

The Crypto Mining Disruptor

With the blockchain industry gaining huge traction, some people have called blockchain the single greatest invention of the 21st century. But despite its alluring promises, the industry is plagued by the huge waste of energy and computing power associated with mining. Forward-thinking individuals and organizations have been calling for “mining with public benefit”, and here comes MANTA to save the day.

Auto-machine learning is the core of MANTA. Not only does this AI technology make mining beneficial to society, but its distributed cloud computing also puts idle computing power to efficient use, saving a lot of resources. In the future, we expect MANTA to inspire more PoW-based blockchain projects to redesign their consensus mechanisms or to connect directly to MANTA. Hash computing will be replaced with a more socially beneficial way of mining.

The Catalyst for the Cambrian Explosion of AI

Today, most AI algorithms are trained through machine learning, which has two challenges:

1. High entry barrier. The Internet offers a parallel: the explosive growth of the mobile economy was made possible only by the popularization of programming languages, which brought more software engineers than ever into designing mobile apps.

2. Expensive computing power. Today, most companies and individuals have varying degrees of demand for AI services, but few can afford the high cost.

The arrival of MANTA is expected to change this situation.

One core feature of MANTA is auto-machine learning. This will effectively lower the barrier to machine learning and bring people with basic computer knowledge but no sophisticated AI background on board. This way, people with creative ideas will be able to design their own AI applications and services without having to become masters of AI programming. Small companies will be able to launch AI services without recruiting more staff. AI will see a diversity of new uses as more people participate.

The other core feature of MANTA is distributed learning based on idle computing power on Matrix’s network and marketplace. As idle computing power will be cheaper than what centralized providers can offer, companies will no longer need to spend a fortune building their own AI training platforms or renting such services. This cost-efficient way of training AI models will give companies an edge over competitors, and more and more corporate clients will be interested in what the AI market has to offer.

A lower entry barrier, more participants together with diversified algorithms and services, all thanks to MANTA, will pave the way for the Cambrian explosion of AI.

The Cornerstone for the Digital Society

The advent of the mobile economy has brought us into an increasingly digitalized society. Maybe in the not-too-distant future, everyone will have a digital version of himself/herself, and AI will be our most trustworthy assistant in the digital world.

Every client appreciates services customized to his/her individual needs. The same is true of AI services, but because of cost constraints this remains a dream, one that MANTA aims to turn into reality. Since MANTA can free users from data privacy worries and from the impossible task of mastering AI programming, it will enable people to train their own AI models and create personalized AI services. This will help us move into a highly efficient future society where everyone has access to personalized services, eliminating the resource waste caused by service centralization.
