MANAS—Make a Better Metaverse

AI: The Cornerstone of the Metaverse

The Metaverse is a virtual world projected from the physical world using information technologies to serve as a digital space where the social activities of the future society take place. We don’t yet have a clear-cut definition of the word “Metaverse”, whose concept is fluid and ever-changing. Institutions and professionals also have varying definitions of the Metaverse, but so far most people would agree that the Metaverse is a series of Internet applications and new social forms integrating reality and virtual reality made possible by revolutionary information technologies such as IoT, VR, blockchain, HCI, AI, 5G/6G, etc. By wearing AR/VR devices, people can enter a 3D virtual world, and everything and everybody in the physical world can be projected into the Metaverse.

The Metaverse brings real and virtual worlds together into one economic, social and identity system where every user can create, live life, have fun and work.

There are many versions of the Metaverse people have envisioned, and AI is integral to all of them. Arguably, AI is the cornerstone of the Metaverse.

Meta’s AI Technologies for the Metaverse

As the company that brought the Metaverse concept back into the spotlight, Meta is watched closely with every step it takes in this new realm, especially its moves in AI.

Until the company discloses more information, Meta’s fourth-quarter 2021 earnings report is the most useful information we have to work with. Reality Labs, Meta’s Metaverse division, lost 10.19 billion USD in 2021, up from 6.62 billion USD in 2020. For so much spending on Metaverse-related technologies, the outcome seems underwhelming. But just as investors and professionals were starting to sober up from the Metaverse hype, a recent series of AI announcements from Meta has restored some confidence.

Meta has released new technologies including Builder Bot and CAIRaoke that aim to create the kind of conversational AI necessary to create virtual worlds.

1. Conversational environment generation tool Builder Bot: In Meta’s demo video, we can see Zuckerberg and a friend playing around in a virtual environment. Through a series of voice instructions, they add clouds, trees, islands, desks, chairs and other virtual objects to the scene. But this is merely a demo version of Builder Bot. Creating a more complex 3D environment will take more time and mental effort.

2. Super AI assistant CAIRaoke: At its core is a self-supervised end-to-end neural network model, which is capable of understanding and learning from users’ voices and body language. This makes our interaction with the voice assistant in the digital world smoother and more natural. In the demonstration video, CAIRaoke keeps track of the amount of salt used for cooking while holding a conversation with the user, and reminds the user about the salt based on what it has observed. Currently, this technology is already in use in Meta’s Portal video-calling devices. In the future, it will also be integrated into AR/VR products to improve people’s interactive experience with digital assistants.

3. Universal machine translation tool: Meta's universal machine translation technology aims to provide real-time audio-to-audio translation between any two languages. This AI translation tool will break the barrier between people speaking different languages. A language we don’t know will no longer keep us from communicating.

Besides the above, Meta also released other AI tools, which, together with the AI supercomputer Meta introduced previously, will bring endless potential to the Metaverse as they mature and find practical applications.

As the passageway to the new era of technologies, the Metaverse is quickly binding itself with AI, and their integration will bring forth content richer and more vivid than we can imagine. Smart companions, smart interaction, as well as the generation and construction of settings and environments among others are great examples of AI’s contribution to making the Metaverse infrastructure smarter. AI has taken over a large amount of Metaverse infrastructure and user orientation work and lightened the load on human employees. By improving human-machine interactions, AI has helped to create better experiences for users, thus creating value in the virtual world rather than our physical world.

AI is empowering the Metaverse to go faster and further. With its help, the Metaverse may one day become something like the Oasis in Ready Player One.

MANAS: The Key to Digital Synesthesia

AI is making its transition from perceptual intelligence to cognitive intelligence. The current consensus in the industry is that the development of AI has three stages: computational intelligence, perceptual intelligence and cognitive intelligence.

Computational intelligence is a machine’s capacity to store information and compute. The weiqi (Go) match between AlphaGo and Lee Sedol is a well-known example. In this respect, AI has far exceeded human beings.

Perceptual intelligence is a machine’s ability to listen, talk, observe and recognize. In this respect, AI is roughly on par with humanity. Speech technology can already imitate the human voice convincingly and understand a dozen different languages. Face recognition can pick one person out of a crowd of hundreds or even thousands. In certain areas, AI clearly does a better job than humans.

Cognitive intelligence is a machine’s capacity to use its understanding of human languages and common sense to deduce and reason. It is an advanced level of AI that revolves around step-by-step deduction and accurate judgment based on common sense. Today, AI’s cognitive intelligence is still far behind what humans are capable of. In the future, with breakthroughs in brain-machine interfaces, AI may finally be able to match humans in cognitive intelligence.

In the Metaverse, users will be able to perceive the digital world through all five senses. To make things easier to understand, we call this synesthesia. In its original meaning, synesthesia breaks the boundaries between sight, smell, touch, hearing and taste and makes these senses interchangeable, so that one’s perception is richer and fuller. In the Metaverse, digital synesthesia is achieved when AI and machines enable our bodies to experience the virtual world more vividly than is possible in physical reality, and to express and receive the warmth of emotions. Language is the bottom layer of digital synesthesia, and hearing is the basis of verbal interaction, while digital sight (VR) and touch (sensory equipment) enable our bodies to feel the texture of the virtual world. The integration and synergy of all these virtual senses will make for a virtual experience surpassing what can be experienced in reality.

Therefore, the speed at which AI develops, especially cognitive intelligence, will directly affect how fast the Metaverse matures. As a newborn concept, the Metaverse doesn’t yet have a clear definition, but it does have some distinguishing features: a concrete digital identity, immersive multimodal perception, low latency, a multi-dimensional virtual world, accessibility from anywhere, and a complete economic and social system. Because of these features, the Metaverse will create large quantities of data and consume large quantities of computing power. High-bandwidth networks, Metaverse devices of all shapes and forms, and natural, intuitive human-machine interaction will all demand smarter algorithms to keep up with the growth of the Metaverse and its user base. The success of the Metaverse will depend in particular on two things: human-machine interaction, which shapes the user experience, and large-scale data processing, which affects the governance model of the Metaverse. Breakthroughs in both areas will depend on AI. How fast AI develops will therefore affect the progress of digital synesthesia, which in turn will decide how soon the Metaverse takes off.

MANAS will provide the necessary solutions for a better digital synesthesia for users.

Object Recognition: A Boost to the Efficiency of Digital Synesthesia

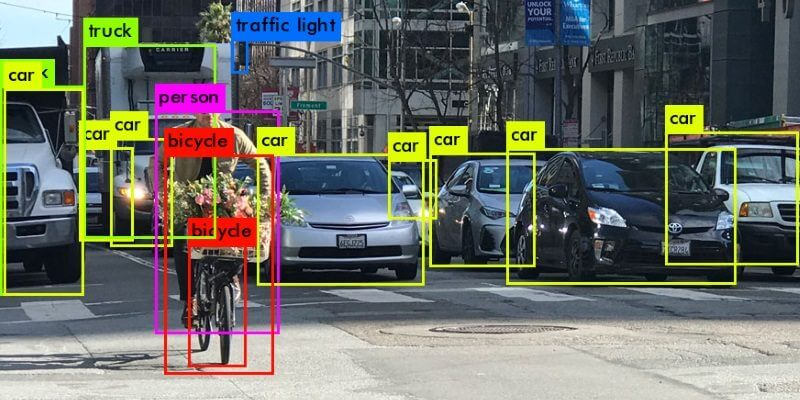

Powered by one of MANAS’s core algorithms, object recognition will play a central role in improving digital synesthesia for the Metaverse.

As the Metaverse is an open digital world, it will contain a large number of items and content that aren’t native to it. Users won’t be able to identify these from the Metaverse’s native database, and because there are so many of them, users can’t reliably tell them apart using experience and their five senses either. Efficiency is paramount to living in a digital world, so we will need external AI services for assistance. One good example is MANAS’s image recognition technology, which greatly improves the efficiency of living in the Metaverse by helping users identify an assortment of objects and content. It also supports customized services through secondary programming, such as automatically categorizing recognized objects, adding them to one’s own database and taking actions accordingly. For instance, after recognizing a friend, you can automatically send them a greeting. After recognizing a danger, you can automatically avoid it.
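The "recognize, categorize, then act" customization described above can be sketched as a small rule table. This is only an illustration under stated assumptions: `Recognition`, `RecognitionRules` and the confidence threshold are hypothetical names chosen for this example, not part of any published MANAS interface, and the recognition results are hard-coded rather than produced by a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Recognition:
    """One recognized object. All fields are illustrative assumptions."""
    label: str         # category, e.g. "friend" or "danger"
    name: str          # identifier of the recognized object
    confidence: float  # score in [0, 1]


@dataclass
class RecognitionRules:
    """Maps recognized labels to user-defined actions and keeps a personal catalog."""
    min_confidence: float = 0.8
    actions: Dict[str, Callable[[Recognition], str]] = field(default_factory=dict)
    catalog: Dict[str, List[str]] = field(default_factory=dict)

    def on(self, label: str, action: Callable[[Recognition], str]) -> None:
        """Register the action to run when an object with this label is recognized."""
        self.actions[label] = action

    def handle(self, rec: Recognition) -> str:
        # Low-confidence results are dropped before anything is stored.
        if rec.confidence < self.min_confidence:
            return "ignored"
        # Categorize the object and add it to the user's own database.
        self.catalog.setdefault(rec.label, []).append(rec.name)
        action = self.actions.get(rec.label)
        return action(rec) if action else "cataloged"


rules = RecognitionRules()
rules.on("friend", lambda r: f"greet {r.name}")
rules.on("danger", lambda r: f"avoid {r.name}")

print(rules.handle(Recognition("friend", "Alice", 0.95)))     # greet Alice
print(rules.handle(Recognition("danger", "lava pit", 0.90)))  # avoid lava pit
print(rules.handle(Recognition("friend", "Bob", 0.40)))       # ignored
```

Keeping the rules in a plain dictionary means a user could add or replace behaviors per label without touching the recognition step itself.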

At the same time, MANAS’s object recognition can serve as the underlying algorithm for other customized advanced user features. For instance, an auto-capture feature built on MANAS object recognition can single out specific objects in a video and take a screenshot of them. This feature will help people capture those fleeting moments of beauty that happen in the Metaverse.
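One way such an auto-capture feature might work is sketched below. It assumes that an object recognition step has already produced, for each video frame, a list of `(label, confidence)` detections. The function name, the frame format, the threshold and the cooldown are all assumptions made for this example and do not describe MANAS’s actual implementation.

```python
from typing import Iterable, List, Optional, Tuple

# Assumed frame format: (frame_index, detections),
# where each detection is (label, confidence).
Frame = Tuple[int, List[Tuple[str, float]]]


def auto_capture(frames: Iterable[Frame], target: str,
                 min_confidence: float = 0.8, cooldown: int = 2) -> List[int]:
    """Return the indices of frames that should be saved as screenshots.

    A frame qualifies when the target label is detected with enough
    confidence. A new capture is taken only when more than `cooldown`
    frames have passed since the last one, so one continuous appearance
    does not produce a pile of near-identical screenshots.
    """
    captured: List[int] = []
    last: Optional[int] = None
    for index, detections in frames:
        seen = any(label == target and conf >= min_confidence
                   for label, conf in detections)
        if seen and (last is None or index - last > cooldown):
            captured.append(index)
            last = index
    return captured


video = [
    (0, [("tree", 0.91)]),
    (1, [("butterfly", 0.88)]),
    (2, [("butterfly", 0.93)]),
    (3, [("butterfly", 0.55)]),
    (4, [("butterfly", 0.97), ("tree", 0.90)]),
    (5, []),
]
print(auto_capture(video, "butterfly"))  # [1, 4]
```

Frame 2 is skipped because it falls within the cooldown after frame 1, and frame 3 is skipped because the detection is below the confidence threshold.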

Thanks to MANAS, digital synesthesia will continue to evolve to enrich people’s experience in the Metaverse.

MANAS: An All-Powerful Assistant for the Metaverse

Service Upgrades Made Possible by the Metaverse

Throughout history, all revolutions in the service industry have been preceded by tech innovations. The popularization of the Internet brought the service industry online. In 1999, China Merchants Bank rolled out online banking, allowing users to make payments and transfers anywhere and anytime. In recent years, mobile Internet has made services accessible in more scenarios. You can order McDonald’s takeout on your phone and pick it up from a drive-through without getting out of your car. The Metaverse will likewise break through the boundaries of our current imagination and create endless possibilities.

In the Metaverse, you can create a hamburger of unique flavour for yourself to enjoy, instead of buying it from someone else. Do you need to be a master of culinary arts to do that? Absolutely not.

In the Metaverse, all you need to be creative is imagination. Leave the rest to the platform! Anything within the power of your imagination can easily be realized, just as an architect in Inception can raise buildings that defy the laws of physics. The hamburger you create online will immediately be 3D printed for you offline, so you can enjoy the delicious food of your own creation.

Sharing will also be an important part of the Metaverse. Creation is only step one. The experience is not complete if we don’t share the hamburger with friends. Some people doubt whether taste can be replicated in the Metaverse, as it is a more subtle and complicated sense than sight or touch. Can the culinary styles of all the different regions shown in A Bite of China really be replicated in the Metaverse?

The brain in a vat thought experiment suggests that all senses are the result of nerve pulses stimulating the brain. Taste is also a series of nerve pulses, which can be separated and replicated with electric signals in the Metaverse. When brain-machine interfaces mature, we won’t even need to send stimuli to the taste buds. Signals will go directly to the nervous system, and users will taste the flavour of a hamburger in their mouths. With this ultimate level of customized service, restaurants aren’t likely to receive any complaints. At most, people will complain that there isn’t enough computing power to keep up with the speed of their imagination.

The Metaverse is the perfect medium for the service industry.

Firstly, both share the same ultimate pursuit: experience. The Metaverse provides the venues for experiences and magnifies them to the maximum. The integration of the real and the virtual adds to the experience and makes it all the richer. Services will be accessible anywhere and anytime in the Metaverse, like air and water. They will be essential, and the service industry will upgrade into what we call Metaservice.

Furthermore, the purpose of both the Metaverse and the service industry is to fill gaps. If a product were flawless, there would be no room for supplementary services. The purpose of the Metaverse is likewise to supplement the real world. One predominant feature of the Metaverse is that it’s demand-based. In contrast to the industrial era, when assembly lines churned out generic products, the Metaverse will usher in the era of customized services.

MANAS: Solution to Metaverse Services

In the future golden age of the Metaverse, services will have two features: customizability and huge demand. To make this happen, the majority of these services won’t come from humans but from AI.

Unlike centralized platforms, MANAS adopts a C2C business structure. This is a fully competitive market, which means better prices for users. Meanwhile, MANTA’s Auto-ML lowers the barrier to developing services for MANAS. The distributed Matrix AI Network provides algorithm scientists with affordable computing power, which also helps keep the pricing of services on MANAS competitive.

As a C2C platform, MANAS will be demand-driven. Unlike on centralized platforms, personalized needs and niche markets can find satisfying solutions here. This way, AI will benefit every aspect of the Metaverse.

MANAS will provide the perfect solutions for the huge amounts of personalized demand in the Metaverse, thus enabling people to better enjoy life in a digital world as well as all the benefits AI creates.

MANAS: Make a Better Metaverse

MANAS has already made its APIs public so any Metaverse platform can access AI services on MANAS through these APIs and repurpose MANAS services for the platform’s own users. At the same time, Matrix is actively seeking partnerships with all sorts of Metaverse platforms to gather more Metaverse data to fuel the development of MANAS.
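To make the integration idea concrete, here is a rough sketch of how a Metaverse platform might prepare a call to an AI service on behalf of one of its own users. Everything specific in it is a placeholder assumption: the base URL, the `/object-recognition` path, the JSON field names and the bearer-token header are invented for illustration and are not taken from MANAS documentation. The request is only built, never sent.

```python
import json
from urllib.request import Request

# Placeholder value: the real MANAS base URL, paths and field names may differ.
BASE_URL = "https://manas.example.com/api"


def build_recognition_request(api_key: str, image_url: str,
                              platform_user: str) -> Request:
    """Build (but do not send) a POST request for a hypothetical recognition service."""
    # Hypothetical payload: the image to analyze and the platform user it is for.
    payload = {"image_url": image_url, "user": platform_user}
    return Request(
        url=f"{BASE_URL}/object-recognition",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_recognition_request("demo-key", "https://example.com/scene.png", "user-42")
print(req.get_method(), req.full_url)
print(json.loads(req.data))
```

A platform would pass the resulting request to an HTTP client and map the response back to its own user, after consulting the actual API reference for the real endpoint and authentication scheme.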

The Metaverse is an industry in rapid growth. It is bound to produce more data than the real world, which could never capture data from every angle the way the Metaverse does. The huge quantities of data make the Metaverse a better training ground for MANAS than the real world. This will help MANAS attract more algorithm scientists and create better AI services. In other words, MANAS will grow as the Metaverse grows. With enough training, Metaverse-born AI may eventually surpass real-world AI and apply itself to scientific research scenarios. For instance, by creating a spacecraft in the Metaverse, we can gain valuable knowledge about spacecraft construction in the real world. Of course, to do this, we need to base the Metaverse data model on the real world. Still, the originality and flexibility of technologies in the Metaverse can’t be matched in the real world, and this process will drive the development of real-world technologies forward.

MANAS will continue to benefit the Metaverse and users in the course of time. Today, we can already use MANAS’s object recognition to improve digital synesthesia and voice recognition to make interactions more efficient in the Metaverse. In the future, as MANAS attracts more and more AI talent to create top-notch AI algorithms and services, the Metaverse will become a better and better experience for users.
